Quantitative Fairness--A Framework For The Design Of Equitable Cybernetic Societies

Image credit: Kevin Riehl

Abstract

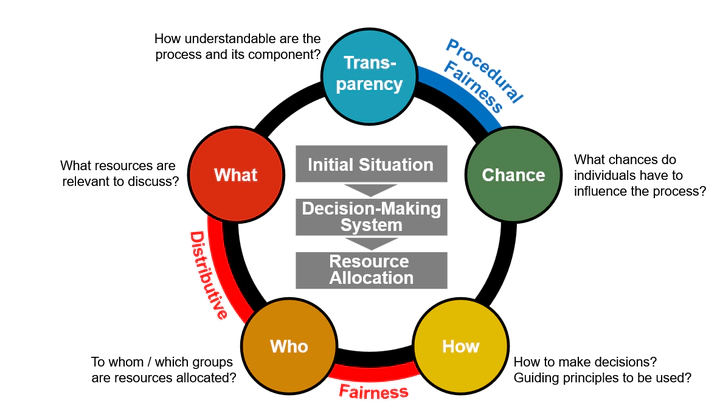

Advancements in computer science, artificial intelligence, and control systems have catalyzed the emergence of cybernetic societies, where algorithms play a pivotal role in decision-making processes that shape nearly every aspect of human life. Automated decision-making for resource allocation has expanded into industry, government processes, and critical infrastructures, and even shapes the very fabric of social interactions and communication. While these systems promise greater efficiency and reduced corruption, misspecified cybernetic mechanisms threaten to reinforce inequities, discrimination, and even dystopian or totalitarian structures. Fairness thus becomes a crucial component in the design of cybernetic systems: to promote cooperation between selfish individuals, to achieve better outcomes at the system level, to address public resistance, to gain trust and acceptance for rules and institutions, to break self-reinforcing cycles of poverty through social mobility, to foster people's motivation, contribution, and satisfaction through inclusion, to increase social cohesion in groups, and ultimately to improve quality of life. Quantitative descriptions of fairness are crucial for embedding equity in algorithms, but only a few works in the fairness literature offer such measures; the existing quantitative measures are too application-specific, suffer from undesirable characteristics, or are not ideology-agnostic. This study proposes a quantitative, transactional, and distributive fairness framework, built on an interdisciplinary foundation, that supports the systematic design of socially feasible decision-making systems. Moreover, it emphasizes the importance of fairness and transparency when designing algorithms for equitable cybernetic societies, and establishes a connection between the fairness literature and resource-allocating systems.